Rotterdam Risk Scores

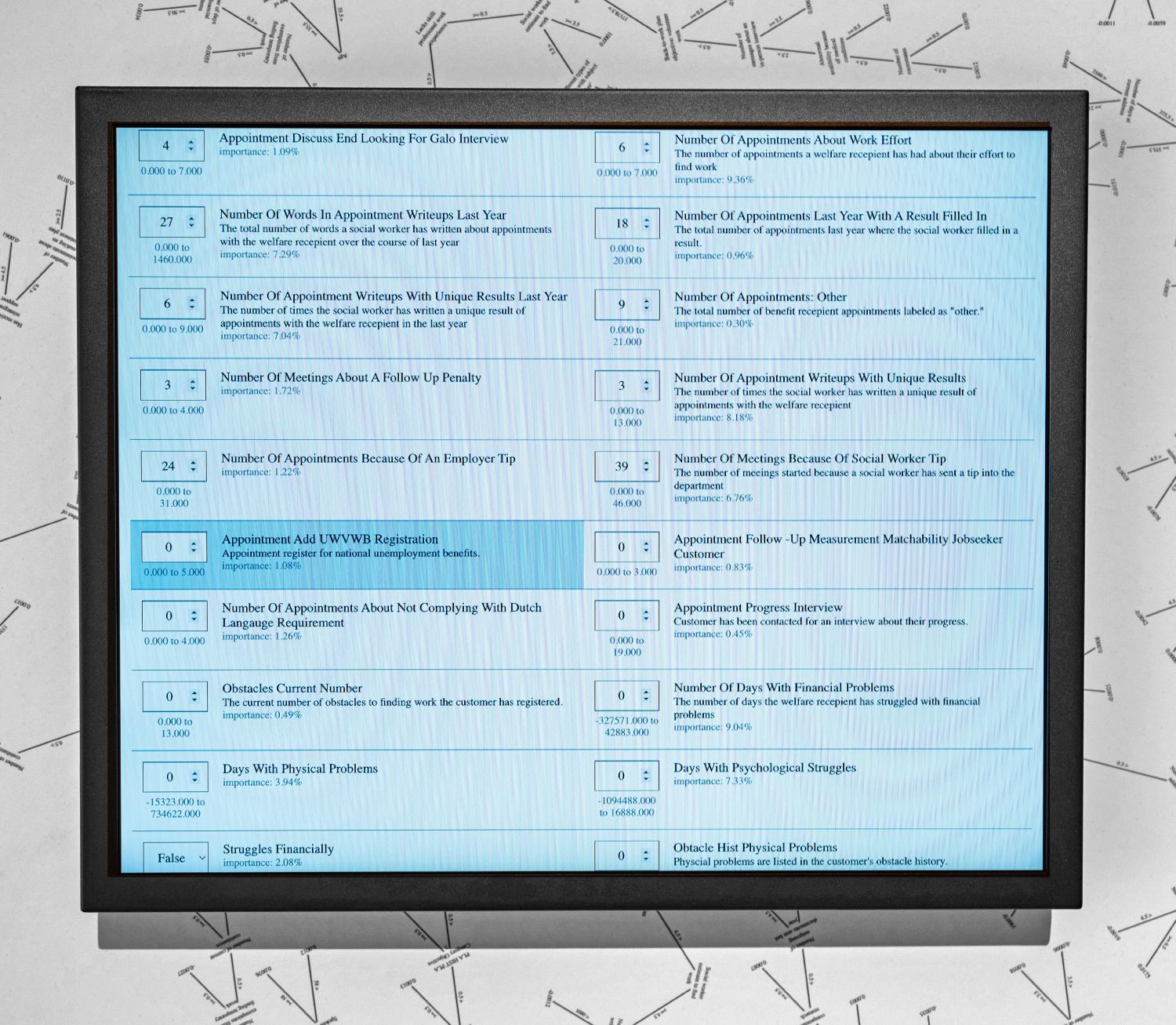

Between 2017 and 2021, the city of Rotterdam used an automated system to assign risk scores to welfare recipients. The scores were meant to indicate potential fraud, and recipients who received the highest, or “riskiest,” scores were subject to investigation.

Lighthouse Reports, in collaboration with WIRED, used freedom of information laws to obtain the code, training data, and machine learning model that power the system. Their investigation found that, like many such attempts to automate criminal justice, Rotterdam’s model is rife with problems. Notably, the model appears to discriminate on the basis of gender and language skills (which can serve as a proxy for ethnicity) when determining scores. Lighthouse has published its full methodology and findings.

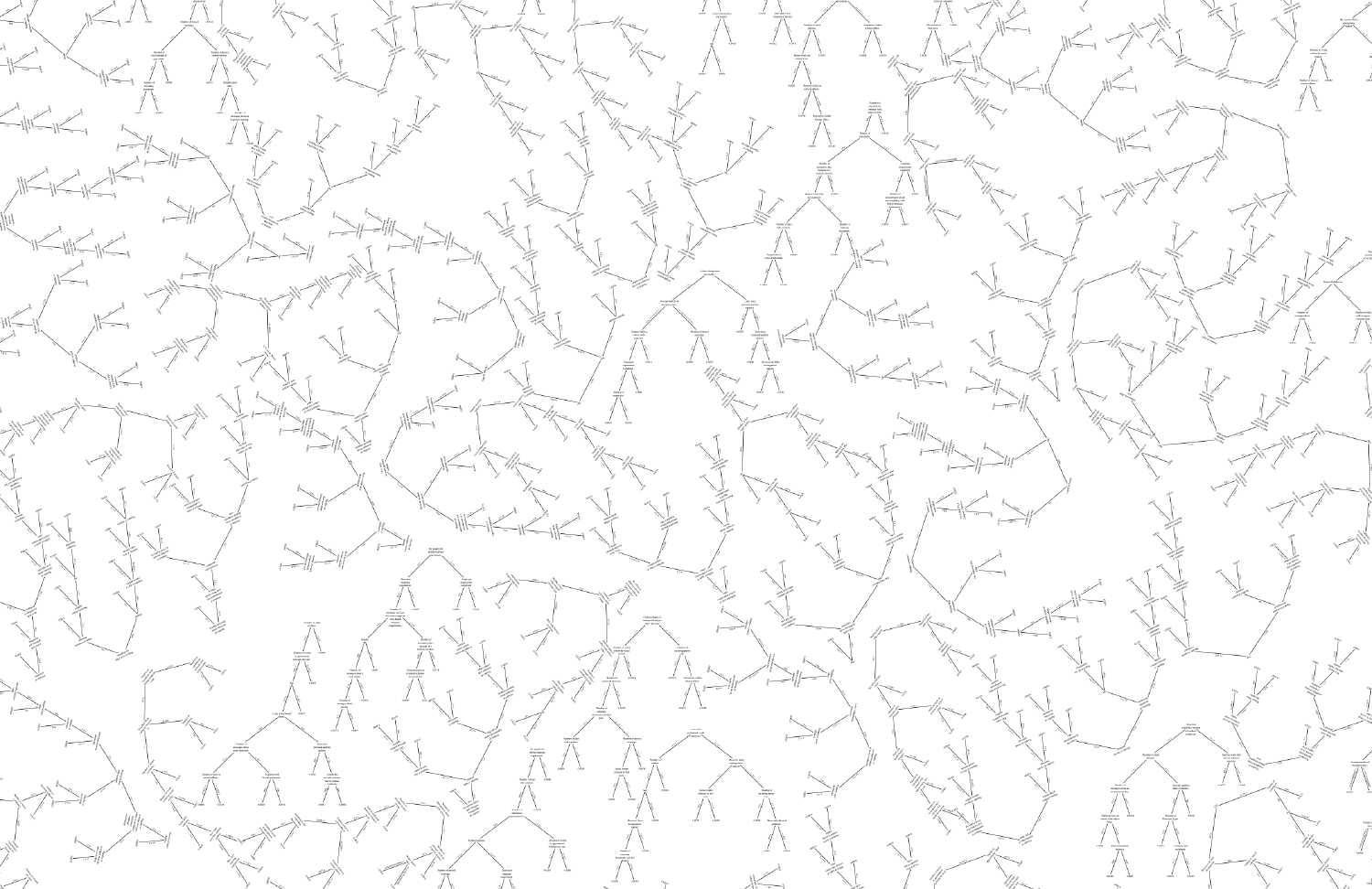

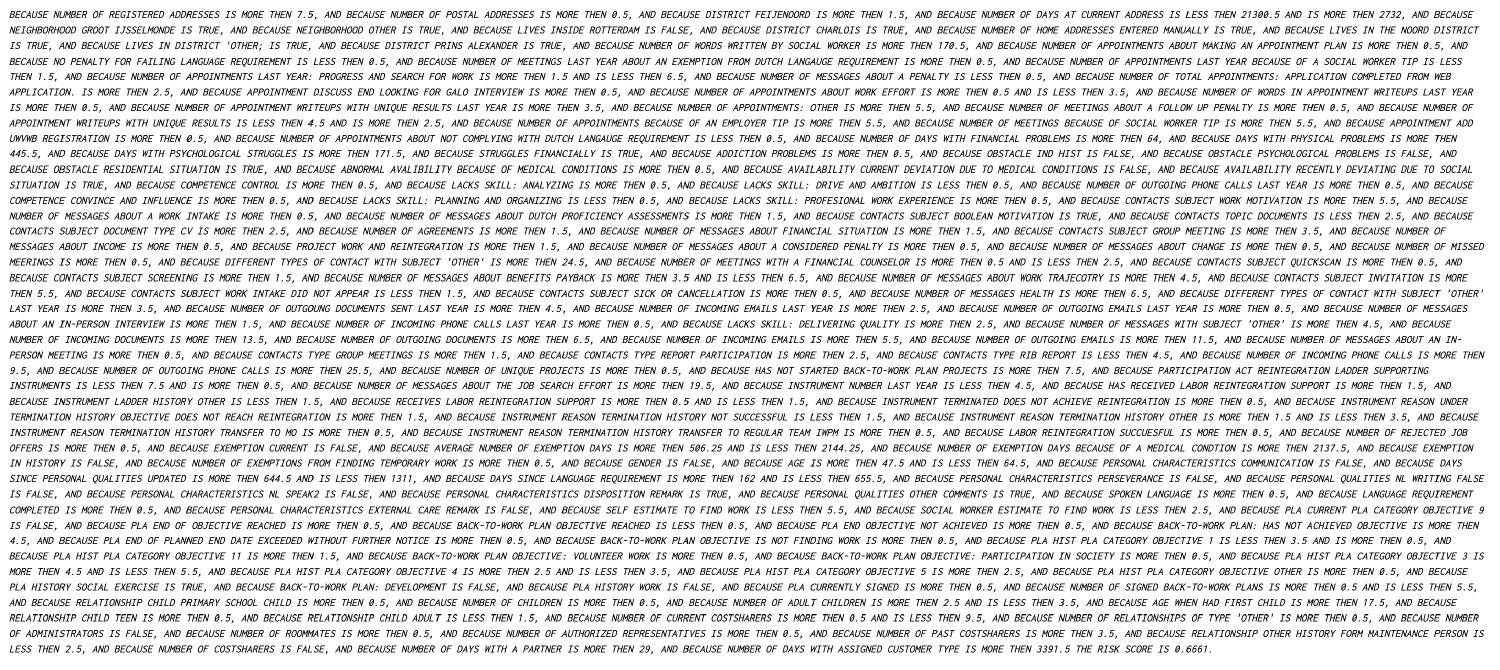

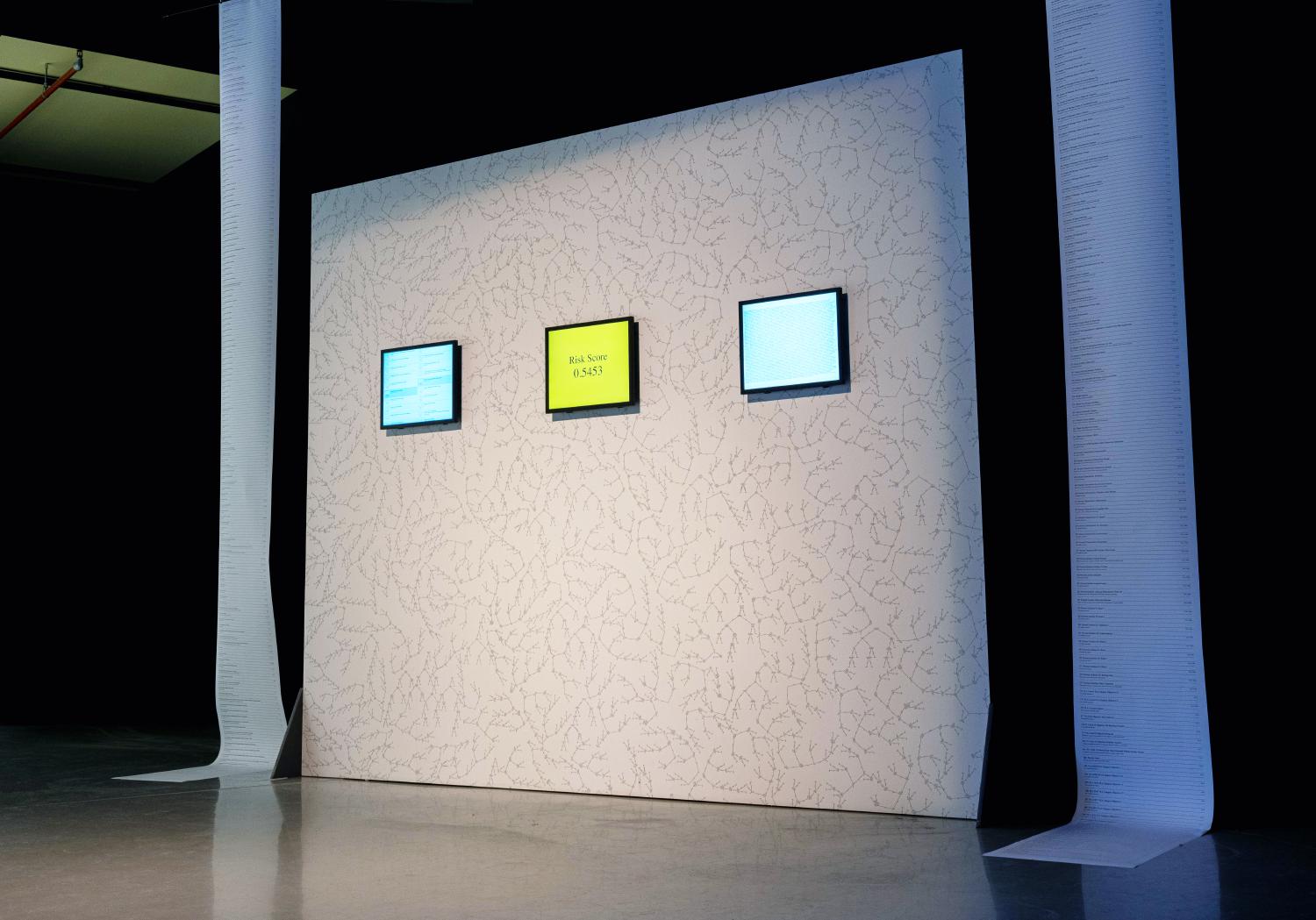

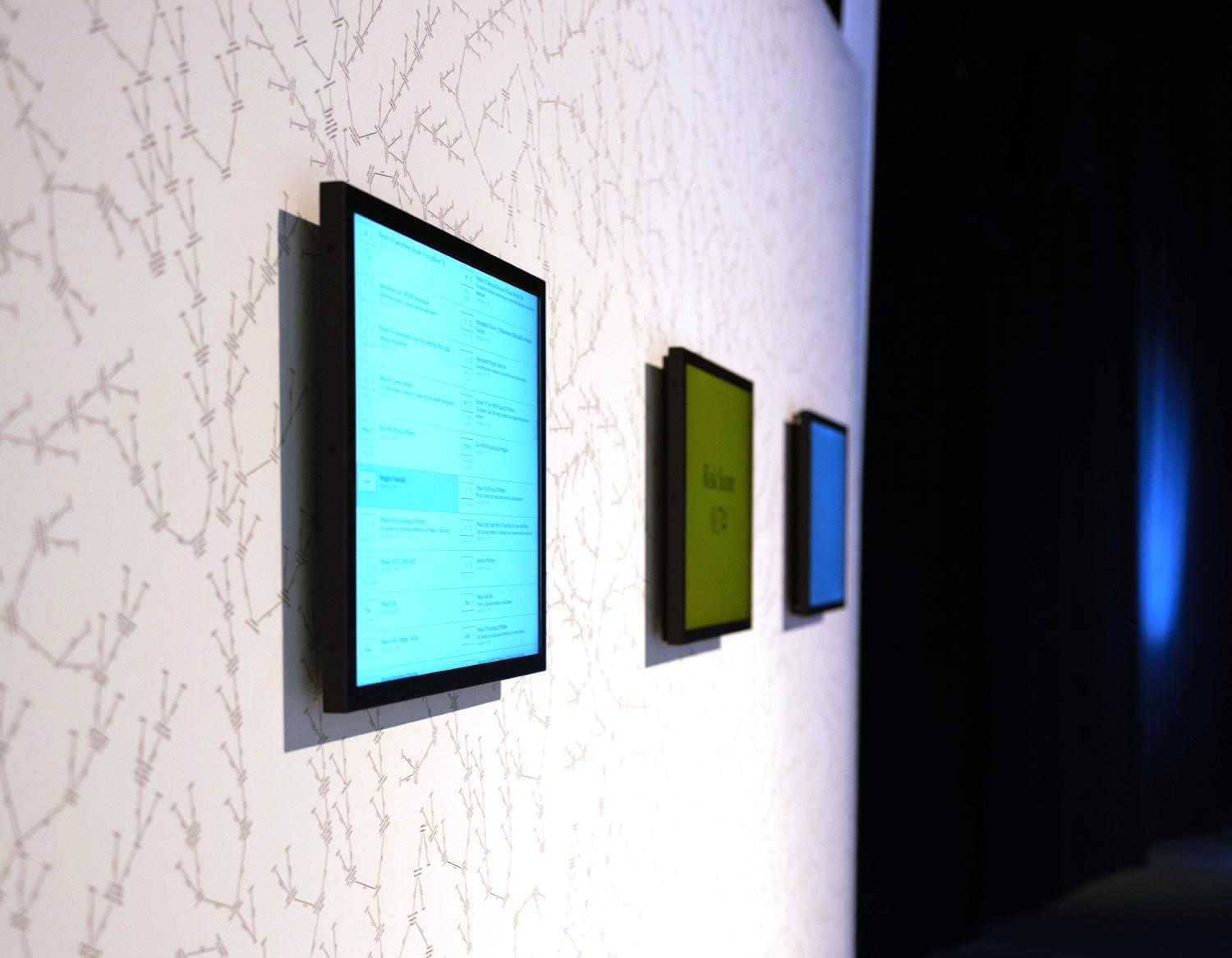

Rotterdam Risk Scores is a website that lets you explore the model in real time. The project has also been realized as a physical installation.

This project was funded with generous support from the Eyebeam Center for the Future of Journalism, the Center for Artistic Inquiry and Reporting, and V2_ Gallery.

Risk Score Interactive

Photos by Fenna de Jong