FFmpeg Explorer

In a fit of madness I have made a web-based GUI for FFmpeg: FFmpeg Explorer.

FFmpeg Explorer

I’m teaching a class at the School for Poetic Computation this fall called Infinite Video. The goal of the class is to explore video archives with code, and I had planned to do a section on FFmpeg, the notoriously powerful and obtuse command-line media manipulator. So, I thought, what if I just made a little something to help students figure out how to use FFmpeg outside the command line – a friendlier way to introduce the tool to beginners. One week later, things have spiraled completely out of control. I’ve built a pretty functional web-based video editor that helps you generate FFmpeg commands in a visual, node-based environment. The tool lets you play around with most (but not all) FFmpeg filters, render videos in the browser (!), import your own files and/or work with demo videos, and export GIFs and MP4s. It also comes with a few built-in examples of the many fun things one can do with FFmpeg.

The central feature of FFmpeg Explorer is a node-based editor that allows you to chain inputs and filters together, built using the xyflow Svelte library (which works great despite being in “alpha”). For simple use cases you can select a filter name and it will be inserted into the graph automatically. Clicking on a filter in the graph opens an options panel where you can modify the filter’s parameters. To get this working I parsed the help output for each filter to get parameter names, mins, maxes, and default values. As you use the tool, it generates a command that you can paste into your terminal for local execution.
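To give a sense of what parsing the help output involves, here is a minimal Python sketch. It is not the code FFmpeg Explorer actually uses. The sample text is an abbreviated excerpt in the style of `ffmpeg -h filter=fps` (the exact wording varies between FFmpeg versions), and the function name, regular expressions, and output fields are my own assumptions for illustration.

```python
import re

# Abbreviated, illustrative excerpt in the style of `ffmpeg -h filter=fps`.
# Real output differs between FFmpeg versions.
SAMPLE_HELP = """\
Filter fps
  Force constant framerate.
    Inputs:
       #0: default (video)
    Outputs:
       #0: default (video)
fps AVOptions:
   fps               <string>     ..FV....... A string describing desired output framerate (default "25")
   start_time        <double>     ..FV....... Assume the first PTS should be this value. (from -DBL_MAX to DBL_MAX) (default DBL_MAX)
   round             <int>        ..FV....... set rounding method for timestamps (from 0 to 5) (default near)
     zero            0            ..FV....... round towards 0
     inf             1            ..FV....... round away from 0
"""

# An option line looks like: name, <type>, a flags column, then free-form help text.
OPTION_RE = re.compile(
    r"^\s+(?P<name>[\w-]+)\s+<(?P<type>[\w ]+)>\s+(?P<flags>[.A-Z]+)\s*(?P<help>.*)$"
)
RANGE_RE = re.compile(r"\(from (?P<min>\S+) to (?P<max>\S+)\)")
DEFAULT_RE = re.compile(r"\(default (?P<default>.+?)\)\s*$")


def parse_filter_help(text):
    """Return one dict per option found in a filter's help text (hypothetical helper)."""
    options = []
    for line in text.splitlines():
        match = OPTION_RE.match(line)
        if not match:
            # Skips headers, input/output pads, and named constants like "zero".
            continue
        help_text = match.group("help")
        rng = RANGE_RE.search(help_text)
        dflt = DEFAULT_RE.search(help_text)
        options.append({
            "name": match.group("name"),
            "type": match.group("type"),
            "min": rng.group("min") if rng else None,
            "max": rng.group("max") if rng else None,
            "default": dflt.group("default").strip('"') if dflt else None,
        })
    return options


options = parse_filter_help(SAMPLE_HELP)
for opt in options:
    print(opt)
```

Values such as `DBL_MAX` come back as plain strings here. A real options panel would still need to map them to something a slider or input field can use.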

Simple filter editing

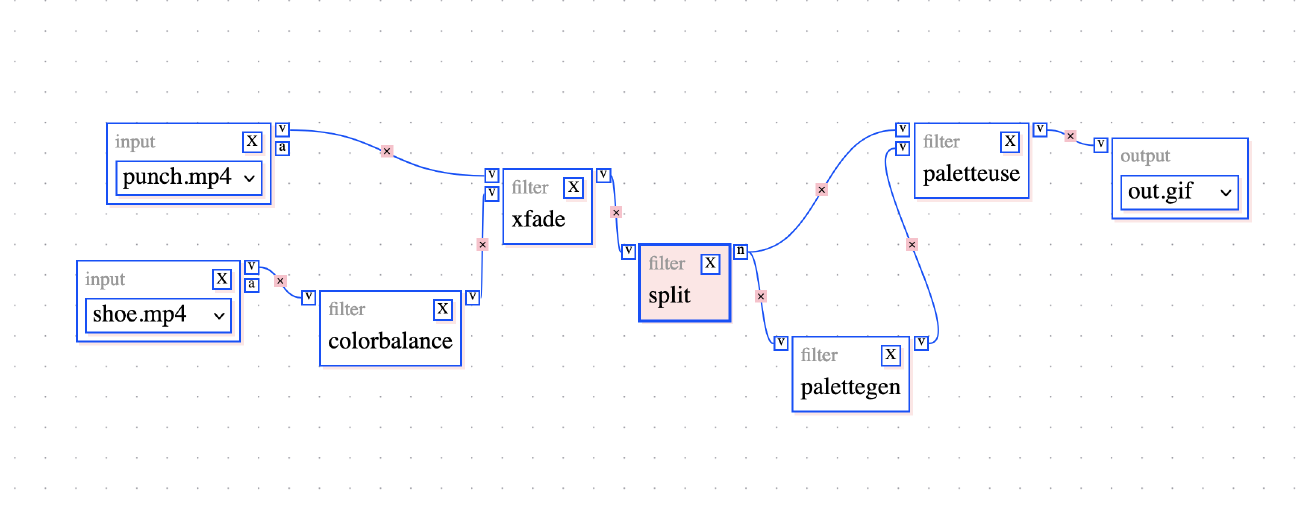

By default, the graph is locked, and new inputs/filters are automatically inserted and connected. But for more complex use cases, you can uncheck the “lock layout” box and manipulate the graph however you’d like.

A complex filtergraph

The above produces the following command:

ffmpeg -i punch.mp4 -i shoe.mp4 -filter_complex "[0:v][1:v]xfade=transition=radial:duration=3,fps=14,scale=w=400:h=-1,split[1][2];[1]palettegen[3];[2][3]paletteuse[out_v]" -map "[out_v]" out.gif

Which renders the following composition:

Sample output
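For anyone curious how a graph might turn into a command string like the one above, here is a rough Python sketch. It is not FFmpeg Explorer's implementation. The chain representation and the helper names (`render_filter`, `render_chain`, `build_command`) are assumptions made up for this example, and it ignores the escaping that filter values containing characters like `:` or `,` would need.

```python
import shlex


def render_filter(name, params):
    """Render one filter: bare name, name=value, or name=k1=v1:k2=v2."""
    if params is None:
        return name
    if isinstance(params, dict):
        args = ":".join(f"{key}={value}" for key, value in params.items())
        return f"{name}={args}"
    # A single unnamed argument, e.g. fps=14.
    return f"{name}={params}"


def render_chain(inputs, filters, outputs):
    """Render one chain: [in labels] f1,f2,... [out labels]."""
    head = "".join(f"[{label}]" for label in inputs)
    body = ",".join(render_filter(name, params) for name, params in filters)
    tail = "".join(f"[{label}]" for label in outputs)
    return head + body + tail


def build_command(input_files, chains, out_label, out_file):
    """Return (argv, filtergraph) for an ffmpeg -filter_complex invocation."""
    graph = ";".join(render_chain(*chain) for chain in chains)
    argv = ["ffmpeg"]
    for path in input_files:
        argv += ["-i", path]
    argv += ["-filter_complex", graph, "-map", f"[{out_label}]", out_file]
    return argv, graph


# The crossfade-to-GIF graph from the command above, as three chains.
chains = [
    (
        ["0:v", "1:v"],
        [
            ("xfade", {"transition": "radial", "duration": 3}),
            ("fps", 14),
            ("scale", {"w": 400, "h": -1}),
            ("split", None),
        ],
        ["1", "2"],
    ),
    (["1"], [("palettegen", None)], ["3"]),
    (["2", "3"], [("paletteuse", None)], ["out_v"]),
]

argv, graph = build_command(["punch.mp4", "shoe.mp4"], chains, "out_v", "out.gif")
print(shlex.join(argv))  # shell-quoted form, safe to paste into a terminal
```

Building an argument list first and quoting it only at the end means the same `argv` can be handed to `subprocess.run` directly, without going through a shell.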

The project is far from complete. There are still some issues around generating complex commands, and I have a list of planned features that you can take a look at (and even contribute to!) on the GitHub repo.

One very easy way to contribute is to make example graphs. Once you’ve made something fun, just hit cmd-s (or ctrl-s on Windows/Linux), and the tool will export a JSON file which you can send my way.

A New Lenna

An important decision I had to make early on with FFmpeg Explorer was the choice of a sample video. The standard default clip for demonstrating anything to do with video processing has become Big Buck Bunny – a Creative Commons-licensed animated short about a violent conflict between an oversized rabbit and some squirrels.

The history of image processing is also a history of standard test materials. For years, the standard test image in the field was Lenna, a portrait of model Lena Forsén cropped from a Playboy centerfold. But what makes for a good “default”? How does a “standard” become standard?

When computer scientists look for images and videos to use in tests, they assess quantifiable attributes that might make a particular image more or less suitable for demonstrating different algorithms. These attributes could include the presence or absence of textures, colors, motion, human faces, and so on. But perhaps of equal importance to these quantitative features are the qualitative pleasures that an image produces in the viewer. This element of pleasure, even if it went unstated, undoubtedly contributed to Lenna’s long-standing success. Of course, the attributes and provenance that made Lenna visually pleasurable to the computer scientists and researchers who elevated the image to “default” status are not pleasurable to everyone.

For my part, I wanted a test video with color, with some motion, with a human face, and that embraced rather than shied away from the element of pleasure. So, on the advice of my dear friend Ingrid Burrington, I chose a famous video of a white supremacist getting punched in the face. This choice inadvertently reignited the “is it ok to punch a Nazi” discourse on Hacker News, which seemed to fall decisively and regrettably on the “no it is not” side of the debate.

Standards and defaults.

Could Richard Spencer getting punched in the face be the new default sample material for image and video processing demonstrations? Only time will tell.